Pharmacovigilance Signal-to-Noise Ratio Calculator

How Social Media Pharmacovigilance Works

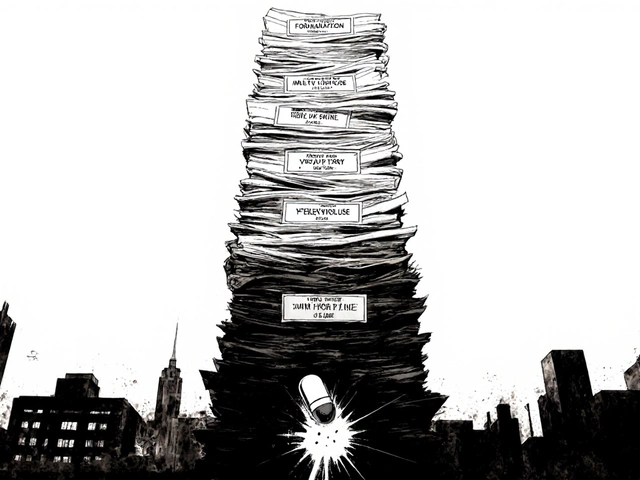

Based on article data: 68% of posts contain noise (exaggeration, jokes, unverified claims). For rare drugs (<10,000 prescriptions), false positive rate reaches 97%.
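As a rough sketch of the arithmetic behind those two figures, the Python below splits a batch of flagged posts into expected real signals and false positives. Treating the 68% noise share as the false positive rate for common drugs, and switching to 97% below 10,000 prescriptions, are assumptions made for illustration; `estimate_signals` and `Estimate` are hypothetical names.

```python
from dataclasses import dataclass

# Rates quoted above. How they combine is an assumption, not a documented formula.
GENERAL_NOISE_RATE = 0.68      # share of posts that are noise
RARE_DRUG_FP_RATE = 0.97       # false positive rate quoted for rare drugs
RARE_DRUG_THRESHOLD = 10_000   # prescriptions; below this a drug counts as rare


@dataclass
class Estimate:
    actual_signals: int
    false_positives: int

    @property
    def signal_to_noise(self) -> float:
        # Real signals per false positive; infinite when there is no noise.
        if self.false_positives == 0:
            return float("inf")
        return self.actual_signals / self.false_positives


def estimate_signals(flagged_posts: int, prescriptions: int) -> Estimate:
    """Split flagged posts into expected real signals and false positives."""
    if flagged_posts < 0 or prescriptions < 0:
        raise ValueError("counts must be non-negative")
    # Assumption: rare drugs use the 97% rate, everything else the 68% rate.
    rate = RARE_DRUG_FP_RATE if prescriptions < RARE_DRUG_THRESHOLD else GENERAL_NOISE_RATE
    false_positives = round(flagged_posts * rate)
    return Estimate(flagged_posts - false_positives, false_positives)


if __name__ == "__main__":
    for rx in (500_000, 5_000):
        est = estimate_signals(1_000, rx)
        print(rx, est.actual_signals, est.false_positives, round(est.signal_to_noise, 3))
```

Under these assumptions, 1,000 flagged posts yield about 320 real signals for a widely prescribed drug and about 30 for a rare one.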

Every year, millions of people take prescription drugs. Most of them never report side effects. Why? Because the system is broken. Traditional adverse drug reaction (ADR) reporting catches only 5-10% of actual problems. Meanwhile, patients are talking openly on Twitter, Reddit, and Facebook, describing rashes, dizziness, panic attacks, and strange interactions with supplements. These aren’t clinical trial data. They’re raw, unfiltered, real-time stories from people living with the drugs. And for the first time, pharmaceutical companies and regulators are starting to listen.

What Is Social Media Pharmacovigilance?

Pharmacovigilance is the science of tracking drug safety after a medication hits the market. It’s not about proving a drug works in trials. It’s about finding out what goes wrong once thousands, or millions, of real people start using it. For decades, this relied on doctors filing forms, patients calling hotlines, or hospitals submitting reports. Slow. Burdensome. Incomplete.

Enter social media. Since around 2014, when the European Medicines Agency and major drugmakers launched the WEB-RADR project, companies have been scraping public posts for mentions of side effects. They use AI to scan millions of tweets, forum threads, and Instagram captions for phrases like “I feel weird after taking X” or “this drug gave me migraines.” Tools like Named Entity Recognition pull out drug names, symptoms, and dosages. Topic Modeling finds patterns in language no one programmed it to look for, like slang for nausea or descriptions of hallucinations.

By 2024, 73% of top pharmaceutical companies were using AI to monitor social media for safety signals. These systems process about 15,000 posts per hour. That’s more than any human team could ever handle. And it’s working, sometimes.

The Real Advantage: Speed and Unfiltered Truth

The biggest win? Time. In 2023, a new diabetes drug started showing up in online discussions about sudden weight loss and confusion. Social media flagged the issue 47 days before the first formal report reached regulators. That’s not a coincidence. It’s a pattern.

Why? Because patients don’t wait for doctors. They go online. They ask others. They share what happened. One Reddit user, MedSafetyNurse88, described how Twitter threads revealed a dangerous interaction between a new antidepressant and St. John’s Wort, a combo not tested in clinical trials because herbal supplements aren’t regulated like drugs. That insight came from patients, not labs.

Social media gives you the full picture. Not the sanitized version filtered through a clinician’s notes. It shows how people actually use the drug: skipping doses, mixing with alcohol, taking it with other meds they bought online. It reveals patterns no clinical trial could capture.

The Dark Side: Noise, Lies, and Privacy

But here’s the problem: 68% of what these AI systems pull in is garbage. People joke. They exaggerate. They confuse symptoms. One post might say, “This pill made me feel like I was dying,” but the person had a panic attack, not a heart issue. Another might claim a drug caused hair loss when they were already undergoing chemotherapy. AI can’t always tell the difference.

And then there’s the data gap. Ninety-two percent of social media posts lack medical history. Eighty-seven percent don’t mention dosage. One hundred percent of reports can’t be verified. You don’t know if the person is real. If they’re telling the truth. If they even took the drug. The FDA’s own 2022 guidance warned: “Robust validation is required before using this data.” That’s code for: “We’re not trusting this yet.”

And the worst part? Privacy. Patients post about depression, seizures, suicidal thoughts, thinking they’re talking to friends. Then, their words get harvested by a pharmaceutical company’s AI system. No consent. No notification. In 2024, a survey of pharmacists found 38% of respondents had seen cases where sensitive health details were collected without permission. That’s not just unethical; it’s legally risky.
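To make the noise problem concrete, here is a toy keyword matcher in Python. It is a deliberately crude stand-in for the entity recognition described earlier, not how production systems work; the drug name `examplitol`, both lexicons, and the function names are hypothetical. It flags any post that mentions a known drug and a known symptom, which is exactly why the hyperbole and chemotherapy examples slip through.

```python
import re

# Hypothetical toy lexicons. Real systems rely on trained NER models and
# medical vocabularies rather than hand-written word lists.
DRUG_LEXICON = {"examplitol"}
SYMPTOM_LEXICON = {"dizziness", "hair loss", "migraines", "dying"}


def find_terms(text: str, lexicon: set[str]) -> list[str]:
    """Return lexicon terms that appear in the text as whole words or phrases."""
    lowered = text.lower()
    return sorted(
        term for term in lexicon
        if re.search(rf"\b{re.escape(term)}\b", lowered)
    )


def naive_flag(post: str) -> dict:
    """Flag a post when it mentions both a known drug and a known symptom."""
    drugs = find_terms(post, DRUG_LEXICON)
    symptoms = find_terms(post, SYMPTOM_LEXICON)
    return {"drugs": drugs, "symptoms": symptoms, "flagged": bool(drugs and symptoms)}


if __name__ == "__main__":
    posts = [
        "This examplitol pill made me feel like I was dying",           # hyperbole
        "Examplitol caused my hair loss, but I'm also on chemotherapy",  # confounder
        "Picked up my examplitol refill today",                          # no symptom
    ]
    for post in posts:
        print(naive_flag(post)["flagged"], "-", post)
```

The first two posts are flagged even though neither is a reliable report: the matcher has no notion of exaggeration or of a competing explanation. Separating those cases takes context, and often human review.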

Ollie Newland

December 1, 2025 AT 23:51

Man, I’ve seen this play out with my aunt’s antidepressant. She posted about brain zaps on Reddit, and within a week, three others had the same story. Pharma didn’t act until the FDA got 17 formal reports, but the pattern was clear online months before. This isn’t just noise, it’s a lifeline for people ignored by the system.

Michael Feldstein

December 3, 2025 AT 07:47

Big pharma scraping tweets is wild, but honestly? I’m just glad someone’s listening. I’ve had side effects no doctor believed until I posted about them and found 200 others with the same thing. It’s messy, but it’s real. Let’s not throw the baby out with the bathwater.

Benjamin Sedler

December 4, 2025 AT 23:30

Oh wow, so now Big Pharma is reading my drunken 3 a.m. rants about how Adderall made me see ghosts? Cool. Next they’ll subpoena my TikTok dance videos to check for ‘tremor indicators.’ This isn’t pharmacovigilance; it’s digital surveillance with a lab coat.

zac grant

December 6, 2025 AT 12:04

Let’s not sugarcoat it: this is the future. AI can’t replace clinicians, but it can flag patterns humans miss. The 68% noise? Yeah, that’s real. But with better NLP and human-in-the-loop validation, we’re getting closer. The real win? Speed. That antihistamine case? Saved lives because someone posted before the form got filed.

And yes, it’s imperfect. But traditional systems are worse. We’re trading one kind of risk for a better one.

Dematteo Lasonya

December 8, 2025 AT 01:47

My mom’s on a rare med. She doesn’t use social media. So her side effects? Invisible. That’s the real problem: not the noise, but the silence. We’re building a safety net that only catches people who are tech-savvy, English-fluent, and online. That’s not progress. That’s exclusion.

Jake Deeds

December 9, 2025 AT 05:51

Oh please. You think a bunch of people typing ‘this drug made me feel like I was dying’ is scientific? That’s not data; that’s emotional theater. The FDA’s right to be skeptical. If we start acting on this, we’ll have lawsuits from people who ‘felt weird’ after taking Tylenol and blame it on some new antipsychotic. This is a lawsuit waiting to happen.

George Graham

December 10, 2025 AT 09:27

Just saw a post in a Filipino health forum about a drug causing tingling in the feet. No one in the U.S. system picked it up because the AI doesn’t parse Tagalog well. We’re not just missing data; we’re missing entire populations. This isn’t just a tech gap. It’s a moral one.

And honestly? If your safety system can’t hear someone because they speak another language, you’re not protecting health. You’re protecting profit.

Rachel Bonaparte

December 11, 2025 AT 02:21

They’re not just monitoring social media; they’re building a behavioral profile of every patient. Next thing you know, your insurance will deny coverage because your Twitter said you ‘felt weird’ after taking a pill. This is how they’ll start denying care before you even get sick. They’re turning patients into data points for corporate risk models. Wake up.

Remember when Big Tobacco said smoking was ‘just a personal choice’? Now they’re doing the same with drugs. Your pain? Your hallucinations? Your insomnia? Just ‘noise’ until it’s profitable to act. This is surveillance capitalism with a stethoscope.

And don’t get me started on how they scrape Instagram captions from people with eating disorders. They’re mining trauma for patent extensions. It’s evil. And it’s legal.

They’ll say ‘it’s for safety.’ But safety for whom? Not the people posting. For the shareholders. Always the shareholders.

I’ve seen this movie before. They’ll call it innovation. We’ll call it exploitation.

Rebecca Braatz

December 11, 2025 AT 02:44

Let’s get real: this is the most powerful tool we’ve had in decades to catch hidden dangers. I’ve trained pharmacovigilance teams for 12 years. The old system? Broken. The new one? Messy, yes. But it’s alive. It’s real. It’s hearing the people who’ve been ignored for too long.

Yes, there’s noise. Yes, there’s bias. But we fix that with better AI, more diversity in training data, and more voices from the margins, not by throwing it out.

Don’t let the cynics scare you. This isn’t the end of science. It’s the beginning of a more human one.

jagdish kumar

December 13, 2025 AT 02:22

Truth is a social construct. The drug doesn’t care if you tweet about it. Only the algorithm does.

Carolyn Ford

December 14, 2025 AT 19:45

Wait, you’re seriously okay with a system that scans your private posts (your depression rants, your panic attack confessions) and turns them into corporate metrics? And you call this ‘progress’? This isn’t science. It’s digital voyeurism dressed up as public health. You’re normalizing the exploitation of vulnerable people’s trauma. That’s not innovation. That’s moral bankruptcy.

And don’t even get me started on how they ignore non-English speakers. You think a Mexican grandmother with diabetes is going to post in English? Of course not. So her side effects? Erased. Just like her.

This isn’t about safety. It’s about control. And you’re all just cheering while they build the cage.

Gillian Watson

December 15, 2025 AT 07:37

My sister took a new migraine med. She posted about the weird taste and dizziness on Reddit. Two weeks later, someone else had the same thing. No one called the doctor. But the company found it. Updated the label. That’s it. No fanfare. Just… better safety. Sometimes the quiet wins matter most.

Gareth Storer

December 16, 2025 AT 07:33

So let me get this straight: we’re trusting AI to parse ‘my brain feels like jelly’ as a neurological side effect? And you’re not worried about someone writing that after eating too much cheese? This is why we can’t have nice things.

Pavan Kankala

December 17, 2025 AT 06:17

They’ll use this to blame patients for side effects. ‘You posted about it, so you must’ve known.’ Classic. They’ll make you complicit in your own harm.

Martyn Stuart

December 19, 2025 AT 01:38

Here’s the thing no one talks about: the training data. Most AI models are trained on English, U.S.-centric posts. That means if you’re in rural India or Nigeria, your symptoms won’t be recognized. Not because they’re not real, but because the system was never built to see you. That’s not bias. It’s negligence.

And the cost? Real people dying quietly. While we debate algorithms, someone’s kid is having a seizure and no one’s listening because their mom posted in Urdu.

We need diversity in data, not just in ethics panels.

Jessica Baydowicz

December 19, 2025 AT 20:46

Y’all are overthinking this. I posted about my weird rash after taking that new blood pressure med. Someone replied, ‘Same! I thought I was the only one!’ Then the company reached out, no lawsuit, no drama, just asked if I’d talk to their med team. We did. They changed the warning label. That’s it. That’s the win.

It’s not perfect. But it’s better than waiting for a doctor to notice you’re not sleeping for three weeks.

Yasmine Hajar

December 21, 2025 AT 11:56

My cousin took a new antipsychotic and started having night terrors. She didn’t go to her doctor; she posted on a subreddit. Within 48 hours, 37 people had the same story. One had a family member who died from it. The company didn’t wait for a formal report. They issued a safety alert. That’s what this is. Not data mining. Community healing.

Yes, there’s noise. But there’s also truth. And sometimes, truth only speaks when it’s anonymous.

Karl Barrett

December 22, 2025 AT 03:29

This is the intersection of ethics, technology, and human vulnerability. We’re not just talking about side effects; we’re talking about who gets heard, who gets ignored, and who gets punished for being honest. The real question isn’t whether AI can detect a rash in a tweet. It’s whether we’re willing to treat patients as people, not data points.

If we use this tool without fixing the power imbalance, we’ll just automate injustice. And that’s the most dangerous side effect of all.

Augusta Barlow

December 22, 2025 AT 14:11

They’re using this to predict who’ll sue. They’re tagging posts with ‘high litigation risk’ and flagging users as ‘potential plaintiffs.’ That’s why they’re so eager to collect this data, not to protect you, but to prepare for when you try to hold them accountable. This isn’t safety. It’s legal reconnaissance. And you’re handing them your trauma on a silver platter.

They’ll say it’s ‘for your protection.’ But they’re really protecting their balance sheets. Every post you make is a line in their risk assessment spreadsheet. You’re not a patient. You’re a liability.

And don’t think they don’t know how to manipulate this. They’ll bury dangerous signals in noise until the patent expires. It’s happened before. With Vioxx. With Zoloft. With Paxil. They’ll do it again.

So go ahead. Post about your side effects. Just know: they’re not listening to help you. They’re listening to protect themselves.

And if you think this is progress? You’re the real side effect.